Google’s Answer to Voice Search

Google just announced one of the biggest leaps forward in the history of Search: The BERT Algorithm update.

Not the lovable Sesame Street character, but a resource-heavy machine learning and natural language processing addition to the search family of algorithms.

Why do I say family? The last major update, RankBrain, arrived in 2015. It is a machine learning model that helps pick the top search results from a myriad of options. It was a big change because if something is relevant and correct enough to get into the top 10, RankBrain is the algorithm that decides which search result ranks #1 versus #3, #7, or beyond.

Now layer BERT on top of RankBrain, and you get better results than ever before. So even though BERT is described as an update, it’s actually a deeper layer that helps surface the most relevant search results.

At its core, search is about understanding language, and the real change with BERT is what Google calls ‘transformers’. No, not Autobots or Decepticons. Transformers in this case are models that process each word in relation to all the other words in a sentence, rather than one by one in order.

That matters for words like “for” and “to” that, in the right place and order, can transform the meaning of a sentence. Now with BERT, Google will be able to better understand the context of the words you say.

This context and these words are especially important in voice search, where the average query runs 7 or 8 words, versus 2 or 3 in a typed search. Small connecting words like these are also one of the things that make English a tricky language to learn.

The example that Google gives on its blog is as follows:

Here’s a search for “2019 brazil traveler to usa need a visa.” The word “to” and its relationship to the other words in the query are particularly important to understanding the meaning. It’s about a Brazilian traveling to the U.S., and not the other way around.

Previously, Google wouldn’t understand the importance of this connection, and returned results about U.S. citizens traveling to Brazil. With BERT, Search is able to grasp this nuance and know that the very common word “to” actually matters a lot here, and we can provide a much more relevant result for this query.

Or another example: “do estheticians stand a lot at work.” Previously, Google was taking an approach of matching keywords, matching the term “stand-alone” in the result with the word “stand” in the query. But that isn’t the right use of the word “stand” in context. The newly trained BERT models, on the other hand, understand that “stand” is related to the concept of the physical demands of a job, and display a more useful response.
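To see why word order matters in the visa query above, here is a toy sketch in Python. It is not how BERT works. It only shows that a pure keyword view cannot tell the two travel directions apart, while even a crude order-aware view can. The function names and the hand-written "to" rule are hypothetical and exist only for illustration.

```python
from collections import Counter


def bag_of_words(query: str) -> Counter:
    """Order-blind view: count which words appear, ignoring position."""
    return Counter(query.lower().split())


def travel_direction(query: str):
    """Order-aware view: split the query around the word 'to'.

    Hypothetical helper for illustration only. Real BERT models learn
    context from data rather than from a hand-written rule like this.
    """
    words = query.lower().split()
    if "to" not in words:
        return None
    i = words.index("to")
    # Everything before "to" is treated as the origin, everything after as the destination.
    return (" ".join(words[:i]), " ".join(words[i + 1:]))


brazil_to_usa = "brazil traveler to usa"
usa_to_brazil = "usa traveler to brazil"

# Keyword matching sees the same set of words in both queries.
same_keywords = bag_of_words(brazil_to_usa) == bag_of_words(usa_to_brazil)

# Reading the words around "to" separates the two meanings.
direction_a = travel_direction(brazil_to_usa)
direction_b = travel_direction(usa_to_brazil)
```

Here `same_keywords` comes out true because both queries contain exactly the same words, while `direction_a` and `direction_b` differ. That gap is the nuance Google says BERT now picks up on.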

So why should we care? Google sees billions of searches every day, and 15 percent of those searches are ones never seen before, so they’ve had to build ways to return results for queries they can’t anticipate.

Not only that, BERT will help Google Search better understand one in 10 searches in the U.S. in English, with plans to roll out to more languages and locales over time. Google is applying BERT models to both ranking and featured snippets in Search, so this is huge.

How Do You Adapt Your SEO Strategy For BERT?

You may or may not be seeing a drop in your search traffic. If you are, that could be the result of the BERT update. It is designed to correct cases where Google misread the context that words like “to” and “for” give a query. Or put another way, your site may have been showing up for searches it wasn’t supposed to because of the sheer authority of your site.

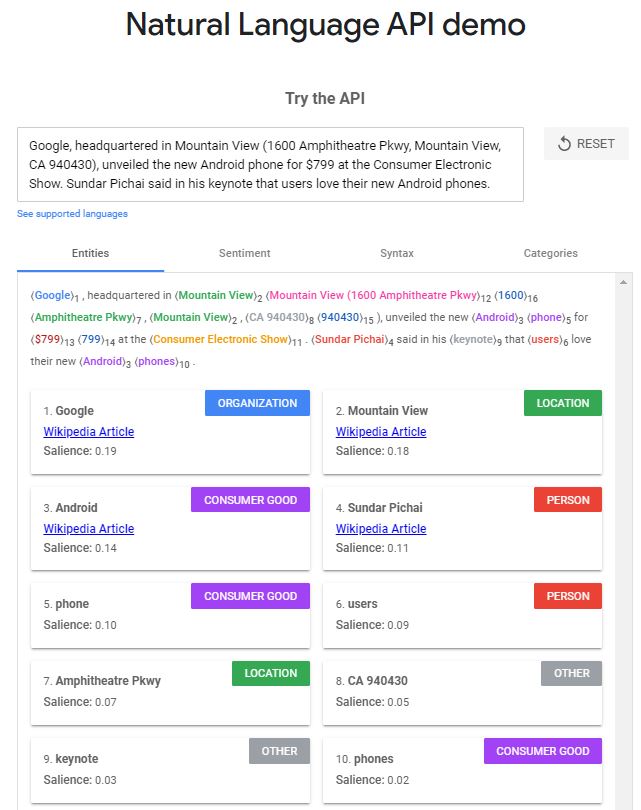

To do a technical check, you can use Google’s Natural Language API Demo tool.

With the tool, you can enter text and Google’s API shows you how it understands that text.
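If you would rather script that check than paste text into the demo, the same kind of analysis is available through the Cloud Natural Language REST API. The sketch below only builds the request and does not send it. It assumes the v1 `documents:analyzeSyntax` endpoint and an API key passed as a query parameter. `build_syntax_request` and `YOUR_API_KEY` are placeholder names of my own. Keep in mind this API shows how Google's language tools parse your text. It is not a window into how BERT ranks pages.

```python
import json
import urllib.request

# Assumed endpoint: syntax analysis in the Cloud Natural Language API (v1).
ANALYZE_SYNTAX_URL = "https://language.googleapis.com/v1/documents:analyzeSyntax"


def build_syntax_request(text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request asking for a syntax analysis of `text`.

    Hypothetical helper for illustration; check the current API docs before relying on it.
    """
    payload = {
        "document": {"type": "PLAIN_TEXT", "content": text},
        "encodingType": "UTF8",
    }
    return urllib.request.Request(
        url=f"{ANALYZE_SYNTAX_URL}?key={api_key}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_syntax_request("do estheticians stand a lot at work", "YOUR_API_KEY")

# To actually send it (needs a real key and network access):
# with urllib.request.urlopen(request) as response:
#     result = json.load(response)
```

Run your page titles or key sentences through it and look at how each word is tagged and which word it depends on. If the parse doesn't match what you meant, a human reader may stumble on that sentence too.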

Now before you go changing everything on your site and shredding your content plan, history has shown that these updates are often rolled out with unknown consequences, and some updates have been modified or even rolled back.

Google wants you to keep coming back to their site. Their value proposition is they serve you the most relevant search results, so you keep coming back. If this update screws that up, they will fix it.

If everything checks out, our recommendation is to keep doing what you’ve always done. Build products, content, and tools your customers want. There used to be a time when you would have to write for two audiences: the human and the machine. With BERT, Google is learning to listen like a human. So speak to the humans, but keep in mind the machine.

The good news is that, like humans, Google isn’t perfect yet, even with BERT and RankBrain. If, for example, you search for “what state is south of Nebraska,” BERT’s best guess is a community called “South Nebraska.” (If you’ve got a feeling it’s not in Kansas, you’re right.)

I find it extremely interesting that Google talks about BERT as if it were a person, as though “BERT” itself has a best guess.

Makes me feel like I’m not in Kansas anymore either, Toto.

P.S. If you’re wondering what BERT stands for, you’re not alone. In 2018, Google introduced and open-sourced a neural network-based technique for natural language processing (NLP) pre-training called Bidirectional Encoder Representations from Transformers, or BERT for short. This technology enabled anyone to train their own state-of-the-art question answering system. Google then applied BERT models to Search.

About The Author

Dave Burnett

I help people make more money online.

Over the years I’ve had lots of fun working with thousands of brands and helping them distribute millions of promotional products and implement multinational rewards and incentive programs.

Now I’m helping great marketers turn their products and services into sustainable online businesses.

How can I help you?